Exemplar-Based Inpainting for 6DOF Virtual Reality Photos

IEEE Transactions on Visualization and Computer Graphics (TVCG)

(Special Issue of TVCG for IEEE ISMAR 2023)

Best Journal Paper Nominee at IEEE ISMAR 2023

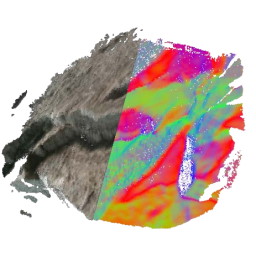

Multi-layer images are currently the most prominent scene representation for viewing natural scenes under full-motion parallax in virtual reality. Layers ordered in diopter space contain color and transparency so that a complete image is formed when the layers are composited in a view-dependent manner. Once baked, the same limitations apply to multi-layer images as to conventional single-layer photography, making it challenging to remove obstructive objects or otherwise edit the content. Object removal before baking can benefit from filling disoccluded layers with pixels from background layers. However, if no such background pixels have been observed, an inpainting algorithm must fill the empty spots with fitting synthetic content. We present and study a multi-layer inpainting approach that addresses this problem in two stages: First, a volumetric area of interest specified by the user is classified with respect to whether the background pixels have been observed or not. Second, the unobserved pixels are filled with multi-layer inpainting. We report on experiments using multiple variants of multi-layer inpainting and compare our solution to conventional inpainting methods that consider each layer individually.
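As a rough illustration of the ideas above, the following minimal sketch composites the layers along a single viewing ray with the standard "over" operator and then checks whether any background remains behind layers marked for removal. It is not the paper's implementation: the function names, the per-ray simplification, the straight (non-premultiplied) alpha convention, and the `threshold` parameter are all assumptions made for this example.

```python
from typing import List, Set, Tuple

# One sample per layer along a single viewing ray:
# (red, green, blue, alpha), straight (non-premultiplied) alpha.
RGBA = Tuple[float, float, float, float]


def composite_back_to_front(layers: List[RGBA]) -> Tuple[float, float, float]:
    """Composite layers with the 'over' operator; layers[0] is the farthest."""
    r = g = b = 0.0
    for lr, lg, lb, la in layers:
        # Each nearer layer covers what is behind it in proportion to its alpha.
        r = lr * la + r * (1.0 - la)
        g = lg * la + g * (1.0 - la)
        b = lb * la + b * (1.0 - la)
    return (r, g, b)


def background_observed(
    layers: List[RGBA], removed: Set[int], threshold: float = 0.95
) -> bool:
    """Hypothetical per-ray test: is there enough opaque content behind
    the removed layers, or would the ray need inpainting?"""
    if not removed:
        return True
    front = max(removed)  # index of the nearest removed layer
    transmittance = 1.0
    for i in range(front):
        if i not in removed:
            transmittance *= 1.0 - layers[i][3]
    coverage = 1.0 - transmittance  # accumulated background opacity
    return coverage >= threshold
```

For example, a half-transparent blue layer in front of an opaque red layer composites to `(0.5, 0.0, 0.5)`. A ray for which `background_observed` returns `False` would be handed to the inpainting stage in this simplified picture.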

Related Projects

Multi-layer Scene Representation from Composed Focal Stacks

Multi-layer images are a powerful scene representation for high-performance rendering in VR/AR. We propose using a focal stack composed of multi-view inputs to reduce local noise in input images from a user-navigated camera and to improve coherency over view transitions. Our results demonstrate the advantages of using focal stacks in coherent rendering, memory footprint, and AR-supported data capturing.

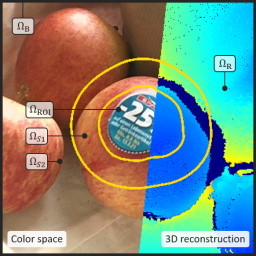

InpaintFusion: Incremental RGB-D Inpainting for 3D Scenes

Inpainting meets dense SLAM to enable interactive AR after 3D inpainting. We perform RGB-D inpainting in a keyframe within a user-specified 3D volume. The proposed system, InpaintFusion, automatically finds subsequent keyframes and performs RGB-D inpainting while keeping spatial coherence between multiple inpainted keyframes. All keyframes are fused into a dense map for a full 3D experience.

Good Keyframes to Inpaint

Finding a good keyframe for InpaintFusion can be difficult for users. Our data-driven approach, Good Keyframes to Inpaint, can find the best one for you. We established six heuristic rules that need to be fulfilled and formulated them in implementable ways. Given an image dataset, we find the optimal balancing parameters for the formulations.

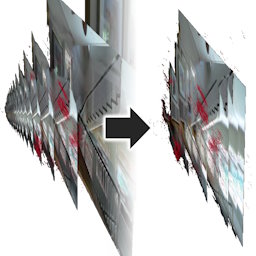

Compacting Singleshot Multi-Plane Image via Scale Adjustment

Recent advances in neural networks make it possible to infer a multiplane image from just a single image. However, such singleshot multiplane image generators inherently lack information about depth scale. Augmented reality applications continuously track the scene using an IMU and can therefore provide metric depth. We use this depth information to rescale singleshot multiplane images. We observed that our optimization algorithm places some planes at very close depths, and we merge them into one for more compact data.
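To make the merging step concrete, here is a minimal sketch of one way to collapse planes whose depths nearly coincide. The greedy grouping rule, the `tol` parameter, and the use of the group mean as the merged depth are assumptions made for this example, not details taken from the paper.

```python
from typing import List


def merge_close_depths(depths: List[float], tol: float) -> List[float]:
    """Greedily group sorted plane depths that lie within `tol` of the
    first depth in the current group, and return one mean depth per group."""
    merged: List[float] = []
    group: List[float] = []
    for d in sorted(depths):
        if group and d - group[0] > tol:
            # The gap is too large: close the current group and start a new one.
            merged.append(sum(group) / len(group))
            group = []
        group.append(d)
    if group:
        merged.append(sum(group) / len(group))
    return merged
```

With depths `[1.0, 1.02, 2.0, 2.01, 5.0]` and `tol=0.05`, five planes reduce to three. A complete version would also have to combine the color and transparency of the merged planes, which this sketch leaves out.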

Bibtex

@article{Mori2023FS2MPI,
  author={Mori, Shohei and Schmalstieg, Dieter and Kalkofen, Denis},
  journal={IEEE Transactions on Visualization and Computer Graphics (TVCG)},
  title={Exemplar-Based Inpainting for 6DOF Virtual Reality Photos},
  volume={29},
  number={11},
  pages={4644--4654},
  year={2023},
  doi={10.1109/TVCG.2023.3320220}
}