Multi-layer Scene Representation from Composed Focal Stacks

IEEE Transactions on Visualization and Computer Graphics (TVCG)

(Special Issue of TVCG for IEEE ISMAR 2023)

Best Journal Paper at IEEE ISMAR 2023

Multi-layer images are a powerful scene representation for high-performance rendering in virtual and augmented reality (VR/AR). The predominant approach to generating such images is a deep neural network, trained on registered multi-view images, that encodes colors and alpha values (representing depth certainty) on each layer. Typical networks use only a limited number of the nearest views. Consequently, local noise in input images captured by a user-navigated camera degrades the final rendering quality and disrupts coherence across view transitions. We propose to use a focal stack composed from multi-view inputs to suppress such noise. We also provide a theoretical analysis of ideal focal stacks for generating multi-layer images. Our results demonstrate the advantages of using focal stacks in terms of coherent rendering, memory footprint, and AR-supported data capture. We also show three imaging applications for VR.
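One common way to compose a focal stack from multi-view inputs is shift-and-add synthetic refocusing: each view is shifted in proportion to its camera offset and a chosen disparity, and the shifted views are averaged. Content at that disparity lines up and stays sharp, content at other disparities blurs, and independent per-view noise is averaged down. The sketch below illustrates this general technique only and is not the paper's pipeline; it assumes rectified views from cameras on a common plane, known per-view offsets, and integer-rounded shifts. The function names `shift_view` and `compose_focal_stack` are hypothetical.

```python
import numpy as np


def shift_view(img, sx, sy):
    """Return (out, valid) with out[y, x] = img[y - sy, x - sx].

    Pixels shifted in from outside the image are 0 and marked invalid.
    """
    h, w = img.shape[:2]
    out = np.zeros_like(img)
    valid = np.zeros((h, w), dtype=bool)
    y0, y1 = max(sy, 0), min(h + sy, h)
    x0, x1 = max(sx, 0), min(w + sx, w)
    if y0 < y1 and x0 < x1:
        out[y0:y1, x0:x1] = img[y0 - sy:y1 - sy, x0 - sx:x1 - sx]
        valid[y0:y1, x0:x1] = True
    return out, valid


def compose_focal_stack(views, offsets, disparities):
    """Shift-and-add refocusing (hypothetical helper).

    views:       (N, H, W) or (N, H, W, C) array of rectified views.
    offsets:     (N, 2) camera offsets (ox, oy) from the reference camera.
    disparities: one disparity per focal slice (pixels per unit offset).
    Assumed convention: a point with disparity d at reference pixel (x, y)
    appears at (x - d*ox, y - d*oy) in the view with offset (ox, oy).
    """
    views = np.asarray(views, dtype=np.float64)
    h, w = views.shape[1:3]
    stack = []
    for d in disparities:
        acc = np.zeros_like(views[0])
        count = np.zeros((h, w))
        for img, (ox, oy) in zip(views, offsets):
            # Undo the parallax expected for disparity d.
            shifted, valid = shift_view(img, int(round(d * ox)), int(round(d * oy)))
            acc += shifted
            count += valid
        count = np.maximum(count, 1.0)  # pixels covered by no view stay 0
        if acc.ndim == 3:
            count = count[..., None]  # broadcast over color channels
        stack.append(acc / count)
    return np.stack(stack)
```

For example, with three views of a single bright point whose disparity is 2, the slice for disparity 2 reproduces the point at full intensity at its reference position, while the slice for disparity 0 shows three copies at one third intensity.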

Related Projects

Exemplar-Based Inpainting for 6DOF Virtual Reality Photos

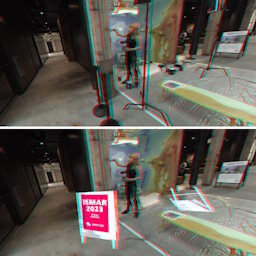

We demonstrated how to implement exemplar-based inpainting on recent multi-layer scene representations. Multiple variants, including different patch-evaluation and imaging strategies, were implemented and evaluated in user studies to assess their relative strengths and identify future challenges. The left image shows snapshots from our VR system displaying the input multi-layer scene and the inpainted and edited scene in anaglyph colors for stereoscopic viewing.
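Exemplar-based inpainting fills a hole patch by patch, copying from the most similar fully known region of the image. The sketch below shows one common patch-evaluation rule, the sum of squared differences (SSD) over the target patch's known pixels, with an exhaustive search on a single 2D layer. It is a generic illustration and not one of the variants evaluated in that project; the square patches, brute-force search, and the names `best_exemplar` and `fill_patch` are assumptions.

```python
import numpy as np


def best_exemplar(image, known, ty, tx, half):
    """Find the fully known source patch most similar to the target patch at (ty, tx).

    image: (H, W) or (H, W, C) array; known: (H, W) bool mask of valid pixels.
    Similarity is SSD over the target patch's known pixels only.
    The target patch is assumed to lie fully inside the image.
    """
    h, w = known.shape
    t = (slice(ty - half, ty + half + 1), slice(tx - half, tx + half + 1))
    target, tmask = image[t], known[t]
    best, best_cost = None, np.inf
    for sy in range(half, h - half):
        for sx in range(half, w - half):
            s = (slice(sy - half, sy + half + 1), slice(sx - half, sx + half + 1))
            if not known[s].all():
                continue  # only fully known patches may serve as exemplars
            diff = (image[s] - target)[tmask]
            cost = float(np.sum(diff ** 2))
            if cost < best_cost:
                best, best_cost = (sy, sx), cost
    return best, best_cost


def fill_patch(image, known, ty, tx, half):
    """Copy the best exemplar into the unknown pixels of the target patch, in place."""
    best, _ = best_exemplar(image, known, ty, tx, half)
    if best is None:
        raise ValueError("no fully known source patch available")
    sy, sx = best
    t = (slice(ty - half, ty + half + 1), slice(tx - half, tx + half + 1))
    s = (slice(sy - half, sy + half + 1), slice(sx - half, sx + half + 1))
    hole = ~known[t]
    image[t][hole] = image[s][hole]  # basic slicing gives a view, so this writes into image
    known[t] = True
    return sy, sx
```

A full inpainting loop would also choose the fill order (for example, prioritizing hole-boundary patches with strong structure) and repeat until the mask is fully known; that ordering is omitted here.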

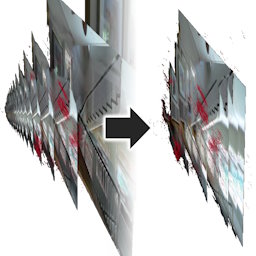

Compacting Singleshot Multi-Plane Image via Scale Adjustment (PDF)

Recent advances in neural networks make it possible to infer a multiplane image from just a single image. However, such singleshot multiplane image generators inherently lack cues to the absolute depth scale. Augmented reality applications continuously track the scene using an IMU and can therefore provide metric depth. We therefore use this depth information to rescale singleshot multiplane images. We observed that our optimization algorithm places some planes at nearly identical depths, so we merge such planes into one to obtain a more compact representation.
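As a rough illustration of the two steps described above, the sketch below fits a single global scale factor that maps relative plane depths to metric depth samples by least squares, then merges consecutive planes whose rescaled depths fall within a threshold using the standard "over" compositing operator. This is a simplified stand-in, not the paper's optimization: the single global scale, the pairing of relative and metric depth samples, the threshold `eps`, keeping the nearest plane's depth for a merged plane, and the function names are all hypothetical choices.

```python
import numpy as np


def fit_scale(relative_depths, metric_depths):
    """Least-squares scale s minimizing sum_i (s * r_i - m_i)^2."""
    r = np.asarray(relative_depths, dtype=float)
    m = np.asarray(metric_depths, dtype=float)
    return float(np.dot(r, m) / np.dot(r, r))


def over(front, back):
    """Composite two straight-alpha RGBA layers (..., 4) with 'over'; returns straight alpha."""
    cf, af = front[..., :3], front[..., 3:]
    cb, ab = back[..., :3], back[..., 3:]
    alpha = af + ab * (1.0 - af)
    premult = cf * af + cb * ab * (1.0 - af)
    color = np.where(alpha > 0, premult / np.maximum(alpha, 1e-12), 0.0)
    return np.concatenate([color, alpha], axis=-1)


def rescale_and_merge(planes, depths, scale, eps):
    """Rescale plane depths and merge planes closer than eps (metric units).

    planes: list of (H, W, 4) RGBA layers sorted near to far; depths: matching relative depths.
    A merged plane keeps the depth of its nearest member, so each group spans less than eps.
    """
    out_planes, out_depths = [], []
    for rgba, d in zip(planes, depths):
        d = scale * d
        if out_depths and d - out_depths[-1] < eps:
            out_planes[-1] = over(out_planes[-1], rgba)  # nearer plane stays in front
        else:
            out_planes.append(np.asarray(rgba, dtype=float))
            out_depths.append(d)
    return out_planes, out_depths
```

Because "over" is associative, merging depth-adjacent layers leaves the composite seen from the reference viewpoint unchanged; from other viewpoints, collapsing planes at slightly different depths slightly alters parallax, which is why the threshold is kept small.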

BibTeX

@article{Ishikawa2023FS2MPI,
  author={Ishikawa, Reina and Saito, Hideo and Kalkofen, Denis and Mori, Shohei},
  journal={IEEE Transactions on Visualization and Computer Graphics (TVCG)},
  title={Multi-layer Scene Representation from Composed Focal Stacks},
  volume={29},
  number={11},
  pages={4719--4729},
  year={2023},
  doi={10.1109/TVCG.2023.3320248}
}