Video See-Through Mixed Reality with Focus Cues

Transactions on Visualization and Computer Graphics (TVCG)

Best Journal Paper at IEEE VR 2022

TVCG Session on VR (Invited Talk) at SIGGRAPH 2022

IEEE TVCG VR Session (Invited Talk) at SIGGRAPH Asia 2022

VR and AR Theme (Invited Talk) at FiO+LS 2022

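Christoph Ebner, Graz University of Technology

Shohei Mori, Graz University of Technology

Peter Mohr, Graz University of Technology

Yifan Peng, Stanford University

Dieter Schmalstieg, Graz University of Technology

Gordon Wetzstein, Stanford University

Denis Kalkofen, Graz University of Technology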

Abstract

This work introduces the first approach to video see-through mixed reality with full support for focus cues. By combining the ability of varifocal designs to adjust the focal distance with the robustness to eye-tracking error of multifocal designs, our novel display architecture reliably delivers focus cues over a large workspace. In particular, we introduce gaze-contingent layered displays and mixed reality focal stacks, an efficient representation of mixed reality content that lends itself to fast processing for driving layered displays in real time. We thoroughly evaluate this approach by building a complete end-to-end pipeline for the capture, rendering, and display of focus cues in video see-through displays, using only off-the-shelf hardware and compute components.
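To make the idea of gaze-contingent layered displays more concrete, the following Python sketch places two display layers around a measured vergence distance (in diopters) and splits a pixel's intensity between them. It is an illustration under stated assumptions, not the method described in the paper: the layer spacing `LAYER_SPACING_D`, the supported dioptric range, and all function names are hypothetical, and the intensity split uses classic linear depth-weighted blending from multifocal displays in place of the paper's focal-stack processing.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical dioptric gap between the front and back layer.
LAYER_SPACING_D = 0.6


@dataclass
class LayerPlacement:
    front_d: float  # front (near) layer, in diopters
    back_d: float   # back (far) layer, in diopters


def place_layers(vergence_d: float,
                 spacing_d: float = LAYER_SPACING_D,
                 min_d: float = 0.0,
                 max_d: float = 4.0) -> LayerPlacement:
    """Center both layers on the measured vergence distance.

    The center is clamped so that both layers stay inside the
    hypothetical supported range [min_d, max_d] (diopters).
    """
    half = spacing_d / 2.0
    center = min(max(vergence_d, min_d + half), max_d - half)
    # In diopters, nearer means larger, so the front layer gets center + half.
    return LayerPlacement(front_d=center + half, back_d=center - half)


def blend_weights(content_d: float,
                  layers: LayerPlacement) -> tuple[float, float]:
    """Linear depth-weighted blending between the two layers.

    Returns (w_front, w_back), which sum to 1. Content outside the
    layer span is assigned entirely to the nearer layer.
    """
    span = layers.front_d - layers.back_d
    t = (content_d - layers.back_d) / span
    t = min(max(t, 0.0), 1.0)
    return t, 1.0 - t
```

For example, with the vergence measured at 1.0 D, `place_layers(1.0)` puts the layers at about 1.3 D and 0.7 D, and content at 1.0 D is split evenly between them. Because the two layers straddle the measured vergence, a moderate measurement error still leaves the true fixation inside the layer span, which is one way to see why combining varifocal and multifocal ideas adds robustness.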

Prototype

Our video see-through display prototype can be built from off-the-shelf components in a relatively small form factor. The display consists of two LCDs per eye, combined by a beam splitter. Focus-tunable lenses shift the virtual image of the LCDs to the user's vergence distance, which is measured by a binocular eye tracker. For capture, we use industrial cameras whose focus distance is likewise controlled by focus-tunable lenses. The prototype additionally includes a 6DoF tracker and a hand tracker.
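The eye tracker reports gaze directions, while the focus-tunable lenses need a target distance. A minimal sketch of that conversion follows, assuming a simplified planar model in which both eyes lie on a horizontal baseline separated by the interpupillary distance (IPD) and each gaze direction is given as a yaw angle toward the nose. It intersects the two gaze rays and converts the depth of the intersection to diopters. The function names and the angle convention are hypothetical; they do not describe the prototype's eye-tracker API or its lens control.

```python
import math


def vergence_distance_m(ipd_m: float,
                        yaw_left_rad: float,
                        yaw_right_rad: float) -> float:
    """Depth (m) at which the two gaze rays intersect.

    Simplified planar model: the eyes sit at x = -ipd/2 and x = +ipd/2,
    both look along +z when the yaw is zero, and a positive yaw rotates
    each eye toward the nose.
    """
    # Left ray:  x = -ipd/2 + z * tan(yaw_left)
    # Right ray: x = +ipd/2 - z * tan(yaw_right)
    # Setting both equal gives z = ipd / (tan(yaw_left) + tan(yaw_right)).
    denom = math.tan(yaw_left_rad) + math.tan(yaw_right_rad)
    if denom <= 0.0:
        return math.inf  # parallel or diverging gaze: optical infinity
    return ipd_m / denom


def to_diopters(distance_m: float) -> float:
    """Convert a distance in meters to diopters (1/m); infinity maps to 0 D."""
    return 0.0 if math.isinf(distance_m) else 1.0 / distance_m


# Example: both eyes fixate a point 0.5 m straight ahead (IPD of 64 mm).
yaw = math.atan(0.032 / 0.5)
distance = vergence_distance_m(0.064, yaw, yaw)
print(f"{distance:.3f} m = {to_diopters(distance):.2f} D")
```

Here the rays meet 0.5 m ahead, i.e. at 2 D. A real system would additionally map this dioptric target to a lens drive signal through a calibration that is not modeled here.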

Example Results

These example results show simulations of two scenes, with the user focusing on the front and on the back of each scene. Our software pipeline allows us to augment a captured focal stack with a rendered focal stack. Results are shown for both the mixed focal stack and the captured focal stack.
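One simple way to picture augmenting a captured focal stack with a rendered one is slice-by-slice alpha compositing: for every focal distance, the rendered slice (with straight, non-premultiplied alpha) is placed over the captured slice at the same focal distance. The sketch below assumes that both stacks share the same focal distances and resolution, and that the rendered slices already contain the appropriate defocus blur, with any occlusion by real objects encoded in their alpha. These are assumptions for illustration, not a description of the paper's pipeline; images are plain Python lists of pixels to keep the example dependency-free.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]          # linear color in [0, 1]
RGBA = Tuple[float, float, float, float]  # straight (non-premultiplied) alpha


def over(fg: RGBA, bg: RGB) -> RGB:
    """Porter-Duff 'over' for a straight-alpha foreground on an opaque background."""
    r, g, b, a = fg
    return (a * r + (1.0 - a) * bg[0],
            a * g + (1.0 - a) * bg[1],
            a * b + (1.0 - a) * bg[2])


def composite_focal_stacks(captured: List[List[RGB]],
                           rendered: List[List[RGBA]]) -> List[List[RGB]]:
    """Composite each rendered slice over the captured slice at the same focus."""
    if len(captured) != len(rendered):
        raise ValueError("stacks must have the same number of focal slices")
    mixed: List[List[RGB]] = []
    for cap_slice, ren_slice in zip(captured, rendered):
        if len(cap_slice) != len(ren_slice):
            raise ValueError("slices must have the same number of pixels")
        mixed.append([over(fg, bg) for fg, bg in zip(ren_slice, cap_slice)])
    return mixed


# Two focal slices of a one-pixel image: a blue real background, and a red
# virtual object that is opaque in the first slice and only partially covers
# the pixel in the second (for example, because defocus blur spreads it out).
captured = [[(0.0, 0.0, 1.0)], [(0.0, 0.0, 1.0)]]
rendered = [[(1.0, 0.0, 0.0, 1.0)], [(1.0, 0.0, 0.0, 0.25)]]
print(composite_focal_stacks(captured, rendered))
```

Keeping the stacks aligned slice by slice means each focal distance can be processed independently, which is convenient for parallel processing.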

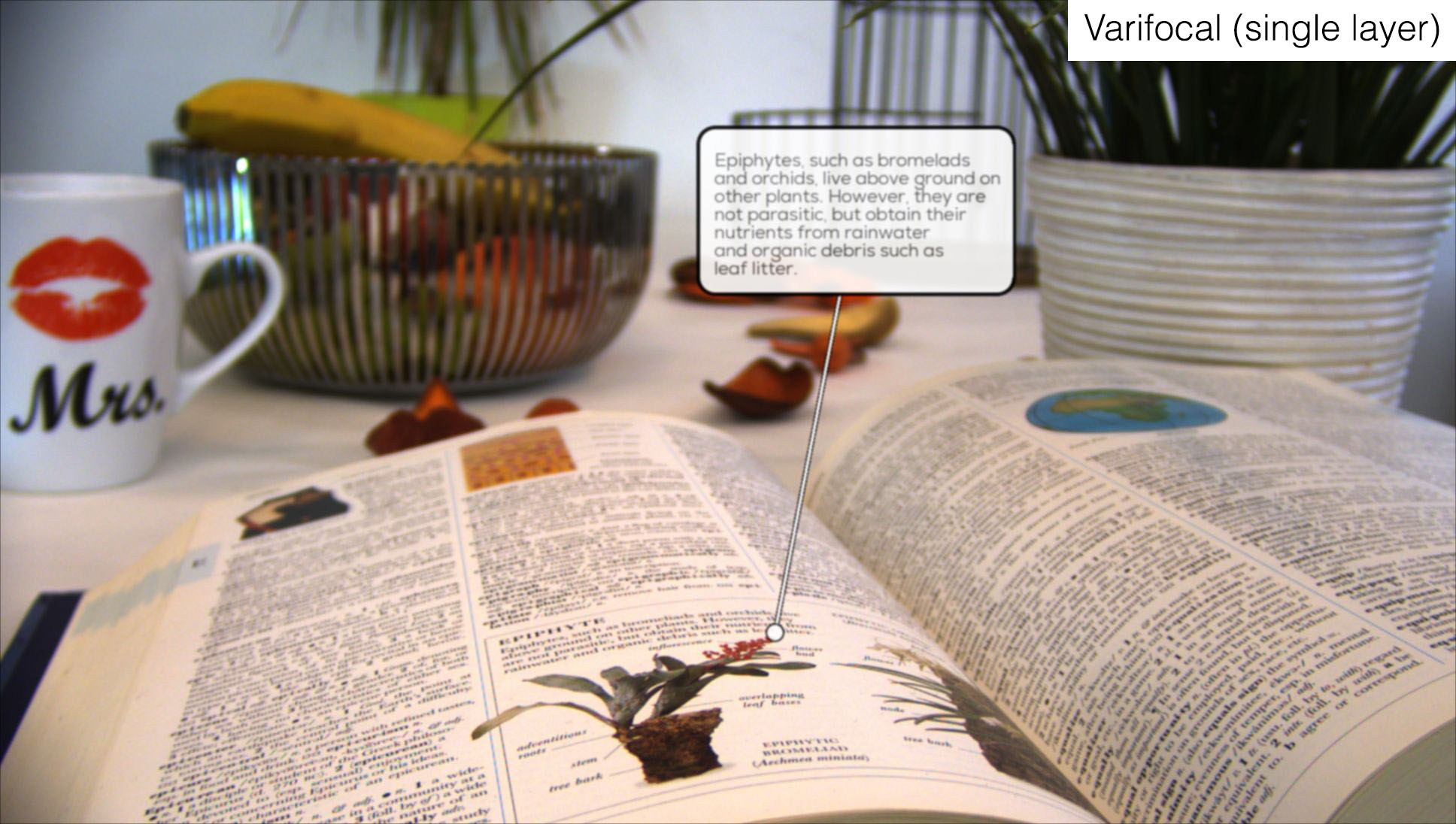

Comparison to Single-Layer Varifocal


The dual-layer varifocal setup makes our prototype tolerant of eye-tracking errors. Current-generation eye trackers have an accuracy of about 0.5° to 1°, which results in vergence measurement offsets of about 0.3 D to 0.6 D (see our paper for details). The comparison contrasts our setup with a conventional single-layer varifocal display under such eye-tracking errors.
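A back-of-the-envelope check of how angular eye-tracking error translates into dioptric vergence error follows. It uses the small-angle approximation, in which the vergence angle in radians is roughly the IPD in meters times the vergence in diopters, and it assumes an IPD of 64 mm and a worst case in which the two eyes' errors point in opposite directions. Both assumptions are ours; this is not the analysis from the paper.

```python
import math

# Assumption: a typical adult interpupillary distance of 64 mm.
ASSUMED_IPD_M = 0.064


def vergence_error_diopters(per_eye_error_deg: float,
                            ipd_m: float = ASSUMED_IPD_M) -> float:
    """Worst-case vergence error (diopters) for a given per-eye angular error."""
    # Worst case: the two eyes' errors point in opposite directions, so the
    # error in the vergence angle is twice the per-eye error.
    vergence_error_rad = 2.0 * math.radians(per_eye_error_deg)
    # Small-angle approximation: vergence angle (rad) ~ IPD (m) * vergence (D).
    return vergence_error_rad / ipd_m


for err in (0.5, 1.0):
    print(f"{err:.1f} deg per eye -> {vergence_error_diopters(err):.2f} D")
```

Running it prints `0.5 deg per eye -> 0.27 D` and `1.0 deg per eye -> 0.55 D`, roughly in line with the 0.3 D to 0.6 D range quoted above. Under this approximation the dioptric error does not depend on the fixation distance.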

Citation

@article{ebner2022vst,
  author={Ebner, Christoph and Mori, Shohei and Mohr, Peter and Peng, Yifan and Schmalstieg, Dieter and Wetzstein, Gordon and Kalkofen, Denis},
  journal={IEEE Transactions on Visualization and Computer Graphics},
  title={Video See-Through Mixed Reality with Focus Cues},
  year={2022},
  volume={28},
  number={5},
  pages={2256-2266}
}